People often ask me, a former optical physicist, how I pivoted into machine learning. I also get a lot of questions about how I acquired the skills needed for the job within a year. This post is meant to address both.

My journey of teaching myself machine learning started in September 2017. I explored many online resources, many of which were not very good. My objective was to develop skills useful for machine learning research, which is actually rather different from machine learning production and deployment, which these days revolves largely around cloud platforms. It is also slightly different from data science work, which focuses more on exploratory data analysis. While I’ve picked up many tools along the way (such as Docker, Comet, Flask, and SigOpt), my primary focus has been on inventing new algorithms and understanding the fundamentals of machine learning. For research, it is not as necessary to learn many tools (TensorFlow and Python allow me to do almost everything), but it is imperative to develop a strong theoretical background.

Hopefully this post will serve as an example roadmap for those hoping to follow a similar path in acquiring ML research skills.

Before September 2017: Getting inspired

During CLEO 2016, the largest annual conference in optics and photonics, the plenary speaker Ray Kurzweil cast a vision of a future with AI as the centerpiece: a general-purpose technology that harvests the multi-modal, multi-spectral information of our complex world (from hardware sensors that the optics community has worked hard to improve) to advance health diagnostics, medical treatments, space exploration, urban transportation, and more. Back at MIT, I took Antonio Torralba’s course on computer vision and loved it. At the end of the course, my project partner, Mohammad Jouni, offered me a researcher-in-residence gig at the consulting company EY, which I initially turned down in favor of my original plan of continuing a career in optical physics at NASA or SLAC National Lab. However, after three months of reflection, I eventually took it.

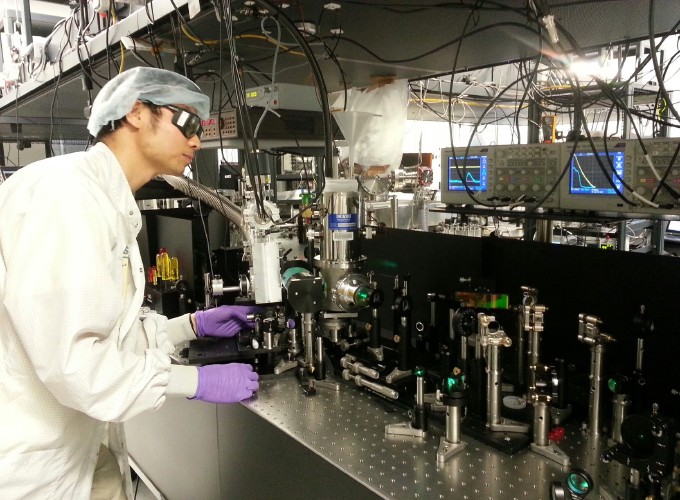

Why? I’ve always had an obsession with automation, along with the conviction that, if I had the right algorithm, most tasks could indeed be automated. One thing that kept me from exploring further was the feeling that I didn’t have enough of a computer science background (my only coding at the time was MATLAB simulations). However, it turns out that machine learning, unlike much of computer science, deals with continuous math, vector fields, and complex dynamics, all of which a physics background prepared me well for. Also, machine learning is a highly experimental field, and I was an experimental physicist adept at using the scientific method to deduce things from empirical data. Finally, optical physics was already a mature field (my subfield of high-intensity lasers won the Nobel Prize in 2018, which means it’s really mature), whereas AI was at an inflection point.

Before finishing my optical physics PhD, I took Andrew Ng’s Machine Learning course on Coursera. Andrew Ng’s teaching style is the traditional bottom-up approach to learning, where you start with the math. He strikes the perfect balance between mathematical rigor and brevity, all the while providing ample intuitive explanations to help the learning process.

Sep 2017 - Nov 2017: Probability and Python

While Andrew Ng’s course gave a great overview of machine learning concepts, I wanted to deepen my understanding of the building blocks of the field. Hence, learning probability theory was a must, so I took the MIT course on probability on edX. It was a difficult course, and I laboriously did all the homework for the first six sections, but the concepts it covers provide most of the tools needed for understanding machine learning from a probabilistic perspective. It laid the groundwork for me to understand (conditional) generative models as sampling from a (conditional) distribution, training as minimizing the expectation of the loss over samples drawn from the underlying distribution, and so on.
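To make the last idea concrete, here is a minimal sketch (a toy example, not taken from the course) of training as minimizing an expected loss. The data distribution (`y = 2x` plus Gaussian noise), the one-parameter model `w * x`, and the step size are all assumptions chosen for illustration. Since the true expectation is not available, it is approximated by an average over samples drawn from the distribution.

```python
import random


def sample_pair(rng):
    """Draw (x, y) from a toy distribution: y = 2x + Gaussian noise."""
    x = rng.uniform(-1.0, 1.0)
    y = 2.0 * x + rng.gauss(0.0, 0.1)
    return x, y


def empirical_risk(w, samples):
    """Average squared-error loss of the model y_hat = w * x over the samples."""
    return sum((w * x - y) ** 2 for x, y in samples) / len(samples)


rng = random.Random(0)
samples = [sample_pair(rng) for _ in range(5000)]

# Gradient descent on the empirical risk. Its gradient with respect to w
# is the sample mean of 2 * (w * x - y) * x.
w = 0.0
for _ in range(200):
    grad = sum(2.0 * (w * x - y) * x for x, y in samples) / len(samples)
    w -= 0.5 * grad

print(f"learned w = {w:.3f}, empirical risk = {empirical_risk(w, samples):.4f}")
```

The learned `w` should land close to the slope used to generate the data, and the remaining risk should be close to the variance of the noise, which no choice of `w` can remove.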

Meanwhile, I started a separate thread of learning Python, which gave me a break from the heavy theoretical exercises and allowed me to get some hands-on experience. I watched all the lectures in the MIT Python course on edX and did all the exercises. It was fun to refresh my knowledge of basic computer science algorithms and to understand some programming concepts in Python (mutability, generators, etc.) that I wouldn’t have picked up had I simply learned on the fly.
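For readers unfamiliar with those two concepts, the sketch below shows one well-known mutability pitfall (a mutable default argument) and a simple generator. The function names are hypothetical and the examples are illustrative, not drawn from the course.

```python
def append_item(item, bucket=[]):
    """Pitfall: the default list is created once and shared across calls."""
    bucket.append(item)
    return bucket


def append_item_safe(item, bucket=None):
    """Fix: create a fresh list on each call when none is passed in."""
    if bucket is None:
        bucket = []
    bucket.append(item)
    return bucket


def running_mean(values):
    """Generator: lazily yields the mean of the values seen so far."""
    total = 0.0
    for count, value in enumerate(values, start=1):
        total += value
        yield total / count


first = append_item(1)
second = append_item(2)            # same list object as `first`
safe_first = append_item_safe(1)
safe_second = append_item_safe(2)  # independent of `safe_first`
means = list(running_mean([2, 4, 6]))

print(first is second, safe_first is safe_second, means)
```

The generator computes each mean only when the caller asks for the next value, so it works just as well on a stream that is too large to hold in memory.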

Dec 2017 - Jan 2018: NIPS and the foray into deep learning

I was one of the lucky few to get a ticket to NIPS 2017, and I went into my first machine learning conference as intimidated by the crowds of people and flashy expo displays as most newcomers there probably were. However, I seized the opportunity to learn as much as I could from this unique experience. The talks typically assumed too much prior knowledge. I found that the best way to learn was to engage the presenters during the poster sessions with back-and-forth questions, often asking them to give me some background on their field before diving into their research. I figured that since no one knew me at the conference, and it was already so crowded, I could let go of my shy inhibitions and maximize my learning by being bold, if a little obnoxious :). There is something about the energy in a gathering like NIPS that allows you to acquire knowledge much more effectively than surfing through YouTube videos.

Seeing all that was presented at NIPS convinced me that deep learning was the subfield of machine learning to focus on. Neural networks can essentially fit any function given enough data, and generalize mysteriously well, which makes them ideally suited for many applications.

I also debated taking the fast.ai course on deep learning, but after watching the first lecture and considering its top-down approach to teaching, I decided that spending 1.5 hours per lecture watching someone else scroll around on their screen wasn’t the most efficient way for me to absorb useful information.

Feb 2018 - May 2018: TensorFlow and my first machine learning paper

work in progress